In 2002, as part of my undergrad in computer science, I took a course in “Artificial Intelligence”. It was a “restricted elective” – you had to either take that or another course called “Artificial Neural Networks”. That Neural Networks was then considered disjoint from AI will tell you how the field of computer science has changed in the 15 years since I graduated.

In any case, as part of our course on AI, we learnt heuristics. These were approximate algorithms to solve a problem – seldom did well in terms of worst case complexity but in most cases got the job done. Back then, the dominant discourse was that you had to tell a computer how to solve a problem, not just show it a large number of positive and negative examples and allow it to learn by itself (though that was the approach taken by the elective I did not elect for).

One such heuristic was Simulated Annealing. The problem with a classic “hill climbing” algorithm is that you can get caught in local optima. And the deterministic hill climbing algorithm doesn’t let you get off your local optima to search for better optima. Hence there are variants. In Simulated Annealing, in the early part of the algorithm you are allowed to take big steps down (assuming you are trying to find the peak). As the algorithm progresses, it “cools down” (hence simulated annealing) and the extent to which you are allowed to climb down is massively reduced.

It is not just in algorithms, or in the case of AI, do we get stuck in local optima. In a recent post, I had made a passing reference to a paper about the tube strikes of 2014.

It is clearly visible from the two panels that far fewer commuters were able to use their modal station during the strike, which implies that a substantial number of individuals were forced to explore alternative routes. The data also suggest that the strike brought about some lasting changes in behaviour, as the fraction of commuters that made use of their modal station seemingly drops after the strike (in the paper we substantiate this claim econometrically).

Screw the paper if you don’t want to read it. Basically the concept is that the strike of 2014 shook things up. People were forced to explore alternatives. And some alternatives stuck. In other words, a lot of people had got stuck in local maxima. And when an external event (the strike) pushed them off their local pedestals (figuratively speaking), they were able to find better maxima.

And that was only the result of a three-day strike. Now, the pandemic has gone on for 5-6 months now (depending on the part of world you are in). During this time, a lot of behaviour otherwise considered normal have been questioned by people behaving thus. My theory is that a lot of these hitherto “normal behaviours” were essentially local optima. And with the pandemic forcing people to rethink their behaviours, they will find better optima.

I can think of a few examples from my own life.

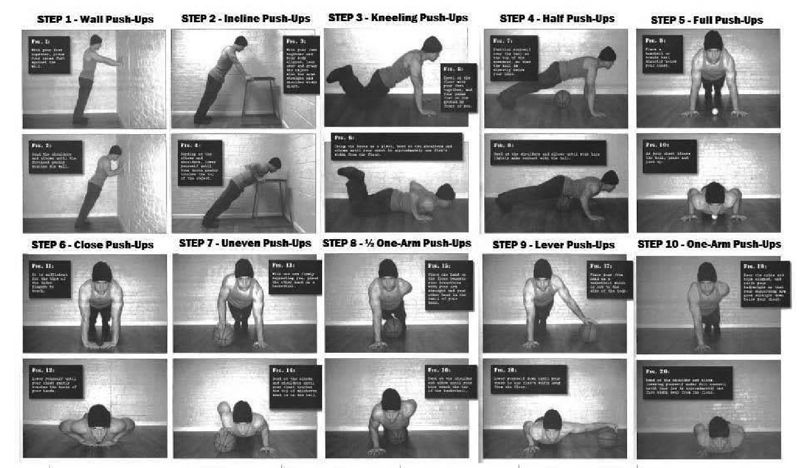

- I wrote about this the other day. I had gotten used to a schedule of heavy weight lifting for my workouts. I had plateaued in all my lifts, and this meant that my upper body had plateaued at a rather suboptimal level. However much I tried to improve my bench press and shoulder press (using only these movements) the bar refused to budge. And my shoulders refused to get bigger. I couldn’t do a (palms facing away) pull up.

Thanks to the pandemic, the gym shut, and I was forced to do body weight exercises at home. There was a limit on how much I could load my legs and back, so I focussed more on my upper body, especially doing different progressions of the pushup. And back in the gym today, I discovered I could easily do pullups now.

Thanks to the pandemic, the gym shut, and I was forced to do body weight exercises at home. There was a limit on how much I could load my legs and back, so I focussed more on my upper body, especially doing different progressions of the pushup. And back in the gym today, I discovered I could easily do pullups now.

Similarly, the progression of body weight squats I knew forced me to learn to squat deep (hamstrings touching calves). Today for the first time ever I did deep front squats. This means in a few months I can learn to clean.

- I was used to eating Milky Mist set curd (the one that comes in a 1kg box). It was nice and creamy and I loved eating it. It isn’t widely available and there was one supermarket close to home from where I could get it. As soon as the lockdown happened that supermarket shut. Even when it opened it had long lines, and there were physical barricades between my house and that so I couldn’t drive to it.

In the meantime I figured that the guy who delivers milk to my door in the morning could deliver (Nandini) curd as well. And I started buying from him. Well, it’s not as creamy as Milky Mist, but it’s good enough. And I’m not going back.

- This was a see-saw. For the first month of the lockdown most bakeries nearby were shut. So I started trying out bread at this supermarket close to home (not where I got Milky Mist from). I loved it. Presently, bakeries reopened and the density of cases in Bangalore meant I became wary of going to supermarkets. So now we’ve shifted back to freshly baked bread from the local bakery

- I’d tried intermittent fasting several times in life but had never been able to do it on a consistent basis. In the initial part of the lockdown good bread was hard to come by (since the bakeries shut and I hadn’t discovered the supermarket bread yet). There had been a bird flu scare near Bangalore so we weren’t buying eggs either. What do we do for breakfast? Just skip it. Now i have no problem not having breakfast at all

The list goes on. And I’m sure this applies to you as well. Think of all the behavioural changes that the pandemic has forced on you, and think of which all you will go back on once it has passed. There is likely to be a set of behavioural changes that won’t change back.

Like how one in 20 passengers who changed routes following the 2014 tube strikes never went back to their earlier routes. Except that this time it is a 6-month disruption.

What this means is that even when the pandemic is past us, the economy will not look like the economy that was before the pandemic hit us. There will be winners and losers. And since it will take time and effort for people doing “loser jobs” to retrain themselves (if possible) to do “winner jobs”, the economic downturn will be even longer.

I’m calling it the “tube strike mental model” for behavioural change during the pandemic.