So a group of statisticians (from Cyprus and Greece) have written an easy-to-read paper comparing statistical and machine learning methods in time series forecasting, and found that statistical methods do better, both in terms of accuracy and computational complexity.

To me, the conclusion is no surprise: the statistical methods involve some human intelligence, in terms of removing seasonality, making the time series stationary, and then applying techniques that have been built specifically for time series forecasting (including some incredibly simple stuff like exponential smoothing).
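To make that "human intelligence" concrete, here is a minimal sketch of two of the steps mentioned above: seasonal differencing and simple exponential smoothing. This is my own illustration, not the paper's code; the function names, the toy quarterly data and the smoothing factor `alpha = 0.5` are all assumptions made up for the example.

```python
def seasonal_difference(series, period):
    """Subtract the value one season earlier to strip out a repeating pattern."""
    return [series[i] - series[i - period] for i in range(period, len(series))]


def simple_exponential_smoothing(series, alpha):
    """Return the smoothed level, which doubles as the one-step-ahead forecast."""
    level = series[0]
    for value in series[1:]:
        # New level = weighted average of the latest value and the old level.
        level = alpha * value + (1 - alpha) * level
    return level


# Hypothetical quarterly data: a fixed seasonal shape that rises by 2 each year.
quarterly = [10, 20, 30, 40, 12, 22, 32, 42, 14, 24, 34, 44]
period = 4

# Step 1 (the human part): we know the data is quarterly, so difference at lag 4.
differenced = seasonal_difference(quarterly, period)

# Step 2: smooth the differenced series to forecast the next year-on-year change.
next_change = simple_exponential_smoothing(differenced, alpha=0.5)

# Step 3: undo the differencing to get a forecast on the original scale.
forecast = quarterly[-period] + next_change
print(forecast)
```

The only "modelling" here is knowing that the data is quarterly and picking a smoothing factor, which is exactly the kind of domain knowledge a general-purpose learner has to rediscover from the data.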

Machine learning methods, on the other hand, are more general purpose – the same neural networks used for forecasting these time series, with changed parameters, can be used for predicting something else.

In a way, using machine learning for time series forecasting is like using that little screwdriver from a Swiss army knife, rather than a proper screwdriver. Yes, it might do the job, but it’s in general inefficient and not an effective use of resources.

Yet it is important that this paper has been written, since the trend in industry nowadays is that, given cheap computing power, machine learning gets used for pretty much any problem, irrespective of whether it is the most appropriate method. You also see the rise of “machine learning purists” who insist that no human intelligence should “contaminate” these models, and that machines should do everything.

By pointing out that statistical techniques are superior to general machine learning techniques at time series forecasting, the authors draw attention to the fact that purpose-built techniques can actually do much better, and that we can build better systems by using a combination of human and machine intelligence.

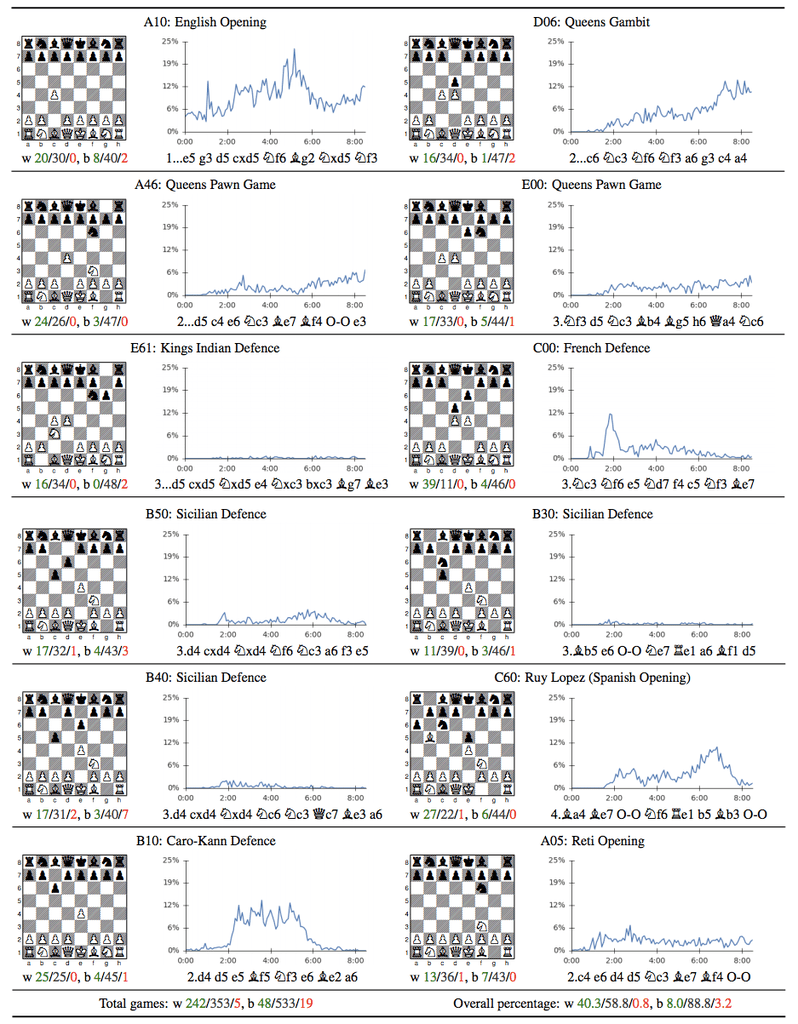

They also helpfully include this nice picture that summarises what machine learning is good for, and I wholeheartedly agree:

The paper also has some other gems. A few samples here:

> Knowing that a certain sophisticated method is not as accurate as a much simpler one is upsetting from a scientific point of view as the former requires a great deal of academic expertise and ample computer time to be applied.

> […] the post-sample predictions of simple statistical methods were found to be at least as accurate as the sophisticated ones. This finding was furiously objected to by theoretical statisticians [76], who claimed that a simple method being a special case of e.g. ARIMA models, could not be more accurate than the ARIMA one, refusing to accept the empirical evidence proving the opposite.

> A problem with the academic ML forecasting literature is that the majority of published studies provide forecasts and claim satisfactory accuracies without comparing them with simple statistical methods or even naive benchmarks. Doing so raises expectations that ML methods provide accurate predictions, but without any empirical proof that this is the case.

> At present, the issue of uncertainty has not been included in the research agenda of the ML field, leaving a huge vacuum that must be filled as estimating the uncertainty in future predictions is as important as the forecasts themselves.
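The complaint above about studies that skip naive benchmarks is easy to act on. Here is a rough sketch of what such a check might look like, assuming a "repeat the last observed value" naive forecast and mean absolute error as the yardstick. Both are my choices for illustration rather than the paper's exact setup, and the numbers are invented.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute gap between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def naive_forecast(history, horizon):
    """Naive benchmark: repeat the last observed value for every future step."""
    return [history[-1]] * horizon


def compare_with_naive(history, actual, model_forecast):
    """Return (model_error, naive_error) on the same held-out values."""
    benchmark = naive_forecast(history, len(actual))
    return (
        mean_absolute_error(actual, model_forecast),
        mean_absolute_error(actual, benchmark),
    )


# Invented numbers: three observed points, two held-out points,
# and the output of some hypothetical fancy model.
history = [5, 6, 7]
actual = [8, 9]
model_forecast = [8, 10]

model_error, naive_error = compare_with_naive(history, actual, model_forecast)
print(model_error, naive_error)
print("beats naive" if model_error < naive_error else "no better than naive")
```

If a model cannot clear this bar on held-out data, its reported accuracy does not mean much, however sophisticated the model is.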