Back when I was at the International Maths Olympiad Training Camp in Mumbai in 1999, the biggest insult one could hurl at a peer was to describe their solution to a problem as a “brute force solution”. Brute force solutions, which were often ungainly, laboured and unintuitive, were supposed to be the last resort, to be used only if one were thoroughly unable to find an “elegant solution” to the problem.

Mathematicians love and value elegance. While they might be comfortable with esoteric formulae and the Greek alphabet, they are always on the lookout for solutions that are, at least to the trained eye, intuitive to perceive and understand. Among other reasons, this stems from the belief that an elegant solution is much easier to grasp intuitively.

When all the parts of the solution seem to fit so well into each other, with no loose ends, it is far easier to accept the solution as being correct (even if you don’t understand it fully). Brute force solutions, on the other hand, inevitably leave loose ends, and appreciating them can be a fairly massive task, even for trained mathematicians.

In the conventional view, though, non-mathematicians don’t have much fondness for elegance. A solution is a solution, and a problem solved is a problem solved.

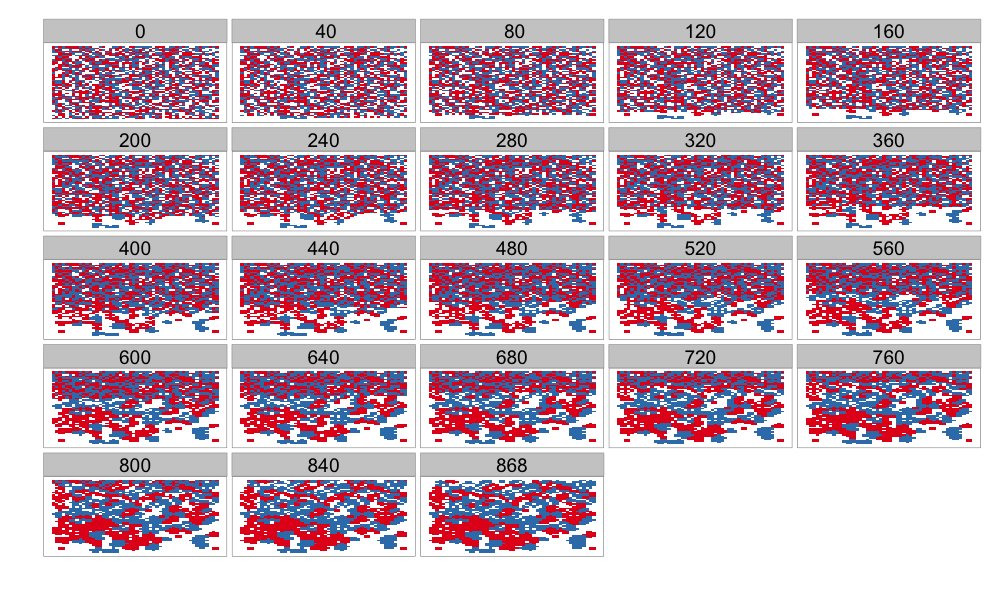

With the coming of big data and increased computational power, however, the tables are turning. Now the more mathematical people, who are more likely to appreciate “machine learning” algorithms, recommend “leaving it to the system”: unleashing the brute force of computational power on the problem so that the “best model” can be found and later implemented.

And in this case, it is the “half-blood mathematicians” like me, who are aware of complex algorithms but are unsure about letting the system take over things end-to-end, who bat for elegance: looking at the data, massaging it, analysing it and then finding that one simple method or transformation that can throw immense light on the problem, effectively solving it!

The world moves in strange ways.